High Availability inside the Datacenter: In leaf/spine VXLAN-based data centers, everyone wants active/active HA, so the available choices differ. There are two types of HA in a data center: Layer 3 and Layer 2. Layer 3 HA is straightforward, because there is always more than one spine, which provides ECMP and HA at the same time. Layer 2 redundancy for the hosts and L4-L7 services connected to the leafs, however, is not such an easy choice.

Cisco introduced vPC nearly 10 years ago, and for a long time it was the first (and almost the only) choice of network engineers. Other vendors have their own technologies; for example, Arista provides Multi-Chassis Link Aggregation (MLAG) for L2 HA on leafs. However, implementing them always comes with problems. One example in vPC is the “peer-link”, which is an important component of the vPC feature.

The peer-link can be troublesome in cases such as dynamic Layer 3 routing over vPC, or orphan members, which may cause traffic to be switched locally between the vPC peers without using the fabric links. To address the “peer-link” issue, the “fabric-peering” solution uses the fabric links instead of a dedicated “peer-link” and turns it into a “virtual peer-link”. With this solution, local switching in those specific cases is no longer a concern.

This solution works better, but without further enhancements it cannot solve dynamic Layer 3 routing over vPC or other issues (PIP, VIP, virtual-rmac). There is another HA solution, described below.

EVPN Multihoming

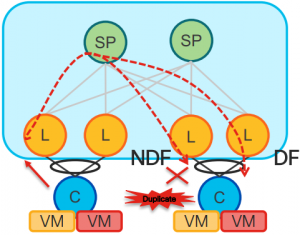

With the introduction of EVPN, EVPN Multihoming brings HA to Layer 2 links without any “peer-link”-like dependency. EVPN carries a 10-byte Ethernet Segment Identifier (ESI) in the Ethernet Auto-Discovery (EAD) route, or route type 1. The ESI is configured under the bundled link that is shared with the multihomed neighbor, and it can be manually configured or auto-derived. To prevent loops caused by packet duplication in multihoming scenarios, the Ethernet Segment Route (ESR), route type 4, is mainly used for designated forwarder (DF) election. The DF is the leaf elected to handle BUM traffic for the segment. In addition, the split-horizon feature allows only remote BUM traffic to be forwarded to the local site; BUM traffic from the same ESI group is dropped. With EVPN multihoming, traffic is balanced across both leafs because they advertise a shared system MAC with the same ESI.
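The DF election and split-horizon rules above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses the default modulo-based election described in RFC 7432 (order the leaf addresses numerically, then pick index `VLAN mod N`), and the function names `elect_df` and `may_forward_bum` are hypothetical, not taken from any vendor implementation. Real platforms may use a different election algorithm.

```python
import ipaddress


def elect_df(leaf_addresses, vlan_id):
    """Pick the designated forwarder for one VLAN on a shared Ethernet segment.

    Assumption: default modulo election, where the leafs are ordered by
    numeric IP address and the leaf at index (vlan_id mod N) wins.
    """
    ordered = sorted(leaf_addresses, key=lambda a: int(ipaddress.ip_address(a)))
    return ordered[vlan_id % len(ordered)]


def may_forward_bum(local_leaf, leaf_addresses, vlan_id, from_es_peer):
    """Decide whether local_leaf forwards a BUM frame onto the segment.

    from_es_peer is True when the frame came from a leaf attached to the
    same Ethernet segment (same ESI); split horizon drops it in that case.
    """
    if from_es_peer:
        return False  # split horizon: never echo traffic back to the same ESI
    return elect_df(leaf_addresses, vlan_id) == local_leaf


leafs = ["10.0.0.2", "10.0.0.1"]  # hypothetical VTEP addresses
print(elect_df(leafs, 100))  # even VLANs land on the lower address
print(elect_df(leafs, 101))  # odd VLANs land on the higher address
```

With two leafs, this election spreads the DF role across VLANs, so each leaf forwards BUM traffic for roughly half of them.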

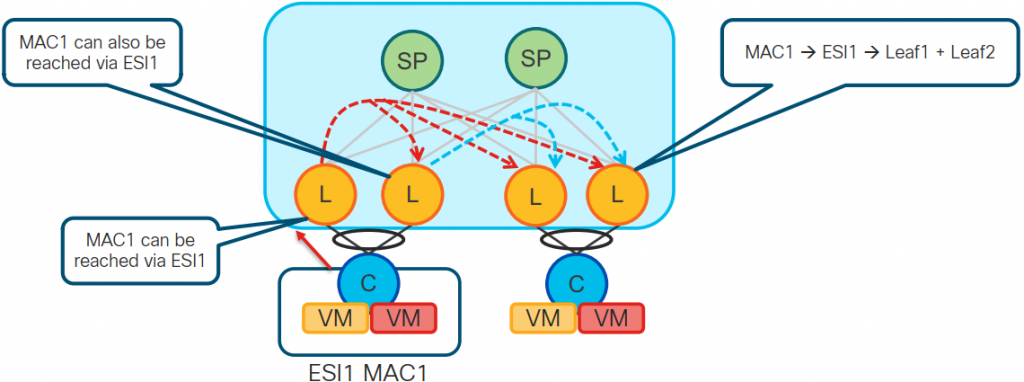

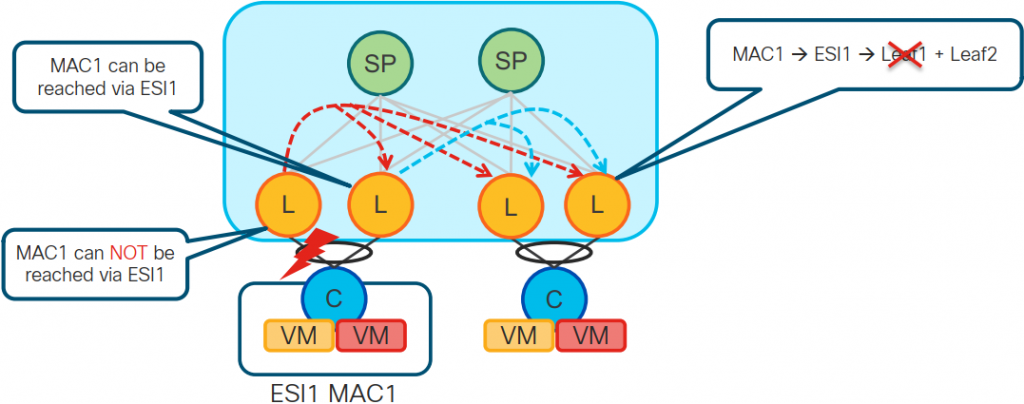

This feature is called “Aliasing”. In failure scenarios, “Mass Withdrawal” provides fast convergence by removing the failed leaf from the ECMP path list. LACP can also be turned on to prevent ESI misconfigurations.
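To make aliasing and mass withdrawal more concrete, here is a minimal Python sketch of how a remote leaf might track them. It is a simplified model, not a real BGP implementation: the class `RemoteLeafView` and its method names are hypothetical, and it assumes that each leaf attached to a segment advertises an EAD route for the ESI, while a MAC may be advertised by only one of them.

```python
class RemoteLeafView:
    """Simplified view of the EVPN state held by a remote leaf."""

    def __init__(self):
        self.es_members = {}  # ESI -> set of leafs that advertised an EAD route
        self.mac_to_esi = {}  # MAC address -> ESI it was learned behind

    def learn_ead(self, esi, leaf):
        self.es_members.setdefault(esi, set()).add(leaf)

    def withdraw_ead(self, esi, leaf):
        # Mass withdrawal: one withdrawn EAD route affects every MAC
        # behind the ESI, with no per-MAC update needed.
        self.es_members.get(esi, set()).discard(leaf)

    def learn_mac(self, mac, esi):
        self.mac_to_esi[mac] = esi

    def next_hops(self, mac):
        # Aliasing: the ECMP set is every leaf attached to the ESI,
        # even if only one of them advertised this MAC.
        esi = self.mac_to_esi[mac]
        return sorted(self.es_members.get(esi, set()))


view = RemoteLeafView()
esi = "00:11:22:33:44:55:66:77:88:99"  # hypothetical 10-byte ESI
view.learn_ead(esi, "leaf1")
view.learn_ead(esi, "leaf2")
view.learn_mac("aa:bb:cc:00:00:01", esi)  # MAC advertised by leaf1 only
print(view.next_hops("aa:bb:cc:00:00:01"))  # both leafs are usable
view.withdraw_ead(esi, "leaf1")             # leaf1 loses its link
print(view.next_hops("aa:bb:cc:00:00:01"))  # only leaf2 remains
```

The point of the model is that next hops are resolved through the ESI rather than per MAC, which is why a single withdrawal converges all hosts behind the segment at once.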

Designated Forwarder

MAC Aliasing

MAC Mass Withdrawal

| | vPC/vPC2 | EVPN Multihoming |
|---|---|---|
| Hardware | All Nexus platforms | Nexus 9300 only (until now) |
| FEX supported | Yes | No |
| Same OS version | Yes | Not mentioned |
| Multiple components | Yes | No |
| QoS needed | For vPC2 (fabric peering) | No |
| ISSU | Yes | No |
| Maximum peers | 2 | 2+ |

Load Balancing

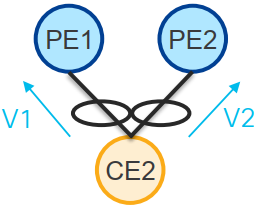

vPC uses the regular port-channel load-balancing methods. EVPN, on the other hand, provides more modern load balancing, with three modes available: (1) Single-Active, (2) All-Active and (3) Port-Active. In Single-Active mode, two leafs are connected to one host, but only one of them is active for a given service. The related MAC address is therefore reachable through only one of the leafs, which amounts to per-VLAN (per-service) load balancing. This mode is suitable for billing certain services or policing specific traffic.

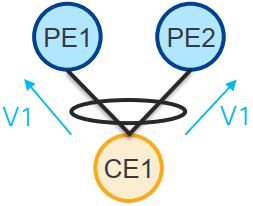

In All-Active mode, every leaf can forward traffic, and load balancing is per-flow. This is the regular load-balancing mechanism, which shares traffic between the leafs as shown in the figure below. This mode is better for giving end hosts more bandwidth.

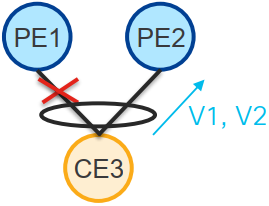

Port-Active brings an active-standby scenario to EVPN multihoming, so only one leaf forwards traffic. In failure scenarios, fast convergence switches the traffic to the standby leaf. This mode is the right choice when you want to force traffic onto a specific link that is cheaper, or when you want to use only one link.
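The difference between the three modes can be summarized with a small Python sketch. It is a conceptual model only: the function `pick_leaf`, the mode strings and the CRC-based flow hash are assumptions made for illustration, and real switches use their own hardware hashing and election logic.

```python
import zlib


def pick_leaf(mode, leafs, vlan_id, flow, failed=()):
    """Return the leaf that forwards a given flow under each multihoming mode.

    leafs  : ordered list of leafs attached to the Ethernet segment
    flow   : tuple identifying the flow, e.g. (src_ip, dst_ip, src_port, dst_port)
    failed : leafs that are currently down
    """
    alive = [leaf for leaf in leafs if leaf not in failed]
    if not alive:
        raise RuntimeError("no leaf available for this segment")
    if mode == "single-active":
        # Per-VLAN (per-service): one leaf is active for each VLAN.
        return alive[vlan_id % len(alive)]
    if mode == "all-active":
        # Per-flow: hash the flow tuple across all available leafs.
        key = "|".join(str(field) for field in flow).encode()
        return alive[zlib.crc32(key) % len(alive)]
    if mode == "port-active":
        # Active-standby: the first available leaf carries everything.
        return alive[0]
    raise ValueError(f"unknown mode: {mode}")


leafs = ["leaf1", "leaf2"]
flow = ("10.1.1.10", "10.2.2.20", 40000, 443)  # hypothetical flow
print(pick_leaf("single-active", leafs, 10, flow))
print(pick_leaf("all-active", leafs, 10, flow))
print(pick_leaf("port-active", leafs, 10, flow, failed=("leaf1",)))
```

In this model, only All-Active can place two flows of the same VLAN on different leafs, which is why it is the mode that adds bandwidth for the end host.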

It is important to note that not every EVPN feature in this post is implemented on all platforms and by all vendors. To recapitulate, both solutions have pros and cons. Depending on the data center design and requirements, you can choose either of them. Keep in mind that you cannot enable both features on a switch at the same time. Also, LACP is an additional tool that improves how these features work and helps avoid misconfigurations.

Note: all figures are taken from Cisco Live presentations.